As the staggering growth of streaming viewership and consumption has been halted by Netflix’s meltdown at the start of 2022, streaming operators face one major challenge: ensuring streaming workflows are scalable both upwards and downwards. They need to allow for enough flexibility to cope with changing demand patterns of viewers, who expect high QoE and are well versed in cancelling subscriptions and switching to a competitor. Moving your streaming workflow to the cloud is a popular and excellent solution, but what are the true benefits? Should you move all components to the cloud? Plus, how do you execute this transition efficiently without jeopardising your QoE? In this guide, we will answer all of these questions and more.

- Current state of live streaming workflows

- Benefits of moving your live streaming workflow to the cloud

- So should you move your streaming workflow to the cloud?

- How to decide what streaming workflow components to migrate

- How to move your live streaming workflow to the cloud

Current state of live streaming workflows

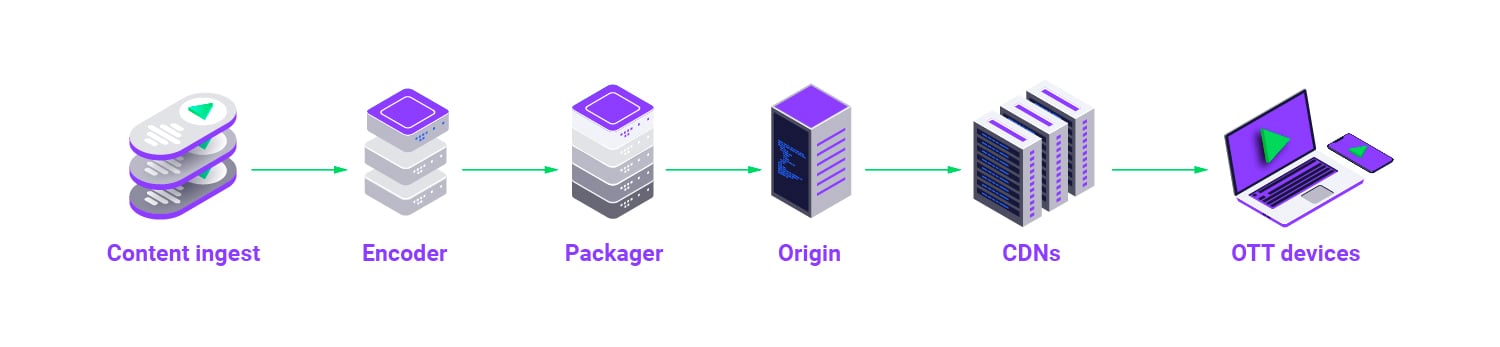

As a process, a streaming workflow is fairly simple. All you need to do is capture the video, compress it, package it into a file format (e.g. CMAF, HLS, DASH), ingest and transcode it, and then deliver it to viewers for playback. In reality, however, it’s much more complex than that.

Viewers demand high-quality streams with low latency and no buffering. Moreover, with ample competing content providers on the market, they’re not very forgiving if you fail to deliver. One error anywhere in your streaming workflow directly affects the QoS negatively, which translates to churn and ultimately loss of revenue.

Besides the QoS pressure from viewers, managing streaming workflows comes with several additional challenges. There is an awful lot of different technology involved, a lack of standardisation leading to data fragmentation, and finally, everything keeps evolving and changing.

With streaming operators constantly working out how to get an edge over their competitors, one massive trend has emerged in the past years: moving live streaming workflows to the cloud. However, does it make sense for every streaming company to follow suit? How can you determine that it’s beneficial to you? If it is, what are the best practices to make the move go as smoothly as possible?

Benefits of moving your live streaming workflow to the cloud

As streaming technologies have become virtualised both pre- and post-pandemic, a fundamental issue has been exposed: the current approach to streaming workflow monitoring isn’t flexible enough to track both hardware and software components. To do that, the monitoring technologies themselves need to become cloud-based as well. In fact, streaming workflows are already increasingly moving to cloud-based technologies because doing so provides several benefits for streaming operators.

For instance, by embracing virtualised versions of your technologies, like encoders, you’re shifting costs to operational expenditure, meaning you pay for a server only when it’s needed. This is in stark contrast to the industry’s past heavy reliance on capital expenditure (to acquire physical encoders, servers, and other machines), when video was delivered either by transmission over terrestrial lines or through physical media.

The resulting elasticity provided by the cloud also enables better scalability and its redundant nature ensures reliability of the service for viewers. Moreover, you no longer need to worry about maintenance or updates. Everything is being handled by the cloud provider and the technology company providing the virtualised component. Finally, cloud services enable you to get to market much faster, a crucial factor given the growth of new OTT offerings over the past few years.

So should you move your streaming workflow to the cloud?

While most streaming providers have already embraced the cloud, they still recognise the need for on-premise equipment and third-party providers in specific cases. So although everything can probably be virtualised, sometimes it makes sense to keep some elements of the streaming workflow behind the corporate firewall. For example, it might make more sense to have initial encoding for a live stream happen on physical machines that can be more closely managed and aren’t subject to the potential latency of cloud resources. The workflow might look something like a rack of encoders producing a master stream and then sending it to cloud-based encoding resources for transcoding and repackaging. Then, from the cloud, the finalised streams could be moved to a content delivery network, either part of the same cloud as the encoders or an entirely different network.

So, in general, moving streaming workflows to the cloud is beneficial, but that doesn’t mean that you should virtualise everything. In the next section, we’ll show you how to determine when to virtualise a workflow component and when to replace one cloud-based component with a different distributed version.

How to decide what streaming workflow components to migrate

No streaming operator is equal; each has its own unique legacy infrastructure, finds itself at different stages of the digital transformation, and has different competitive advantages, markets, and goals. Therefore, there is no one-size-fits-all recommendation. This means you’ve got to first assess your current setting, then decide for each workflow component whether it makes sense to move it, and finally, analyse if and how it could be monitored if moved to the cloud.

1. Assess where you currently stand

As with any technology, some companies are further along than others. You might have only just learned to adapt the cloud to your streaming needs, or you may already be employing the latest best practices and cloud technology to leverage its full potential. Either way, understanding where you stand in terms of cloud adoption and usage right now is key to planning out the future of your technology stack and streaming workflows.

Cloud KPIs: adoption vs. usage

Understanding to what extent your organisation is “cloudified” (yes, that’s now copyright) is important for two reasons. First, it’s strategic because it helps you find out how you can better use cloud technology to improve the efficiency, resiliency, and performance of your streaming service long-term. Second, it’s tactical because it lets you identify how you can employ cloud technologies right now to improve your engineering efforts.

For example, moving from specific hardware, such as encoders, to virtualised instances can expose APIs which provide programmatic control over encoding functionality. This can result in better-engineered software. Moreover, in the long-term, virtualisation as part of the overall video technology stack can ensure improved scalability, resiliency, and even lower overall operational cost.

However, there is a distinction that needs to be made when thinking of those two reasons. The first is all about adoption. Making the decision to migrate from server-based to cloud-based technologies is a strategic one, but it doesn’t dictate the way the cloud is employed in engineering efforts. It could be virtualisation or it could be serverless functions.

The second reason is focused on usage. Even if the strategic decision hasn’t been made to migrate to the cloud long-term, individual cloud technologies can be employed immediately to solve specific challenges or gain efficiencies within the workflow.

The bottom line? Measuring how well you are adopting and using cloud technologies will give you a clear picture of both your immediate and long-term opportunities.

Measuring cloud adoption within streaming workflows

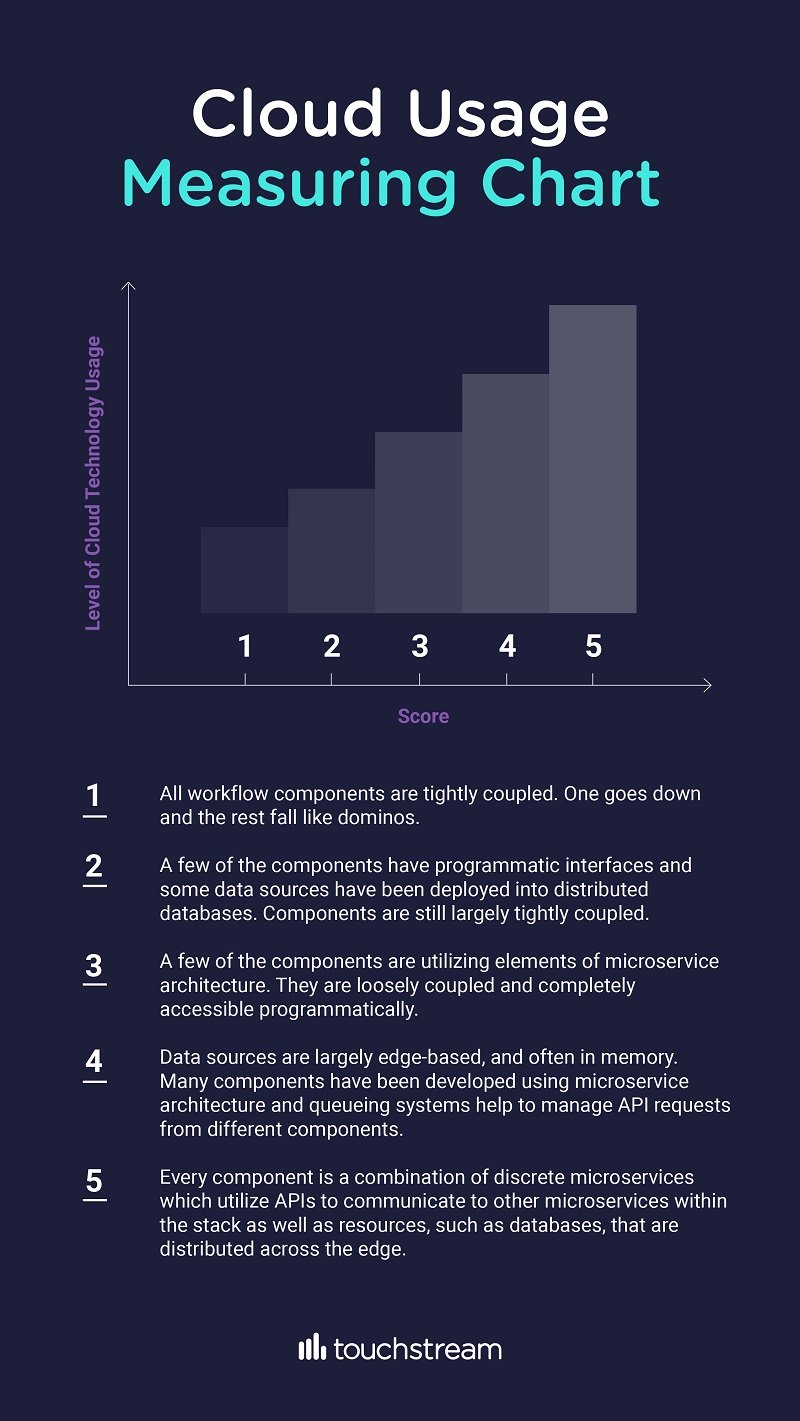

So how do you measure your adoption? Part of that measurement is about technology selection. There are many different technologies within the cloud and as mentioned, you could be just virtualising components of the workflow or you could be embedding them within the very fabric of the cloud through serverless functions. However, they are both cloud technologies. To help you measure your adoption of cloud technologies, consider the following scale:

Measuring cloud usage within streaming workflows

Measuring usage is similar to measuring adoption. There are lots of ways you can utilise cloud technologies as part of the workflow–even if your adoption of the cloud overall is relatively low.

Using your scores to drive change

Once you score your development efforts and technology stack, you should have a good idea of where you currently stand in terms of developing a scalable, resilient, and high-performing streaming service, as well as a clear roadmap for moving forward with cloud technologies. By combining the results of this subjective assessment with data from your streaming service, you can quantify how cloud technology adoption and usage could impact your subscriber growth, user engagement, attrition, advertising revenue, and more. For example, if you are seeing QoE and engagement data drop as simultaneous users increase, then transitioning workflow components from hardware to cloud, from virtualised to serverless, or from traditional to microservice architecture could improve those metrics in the future.

Assessing cloud technologies

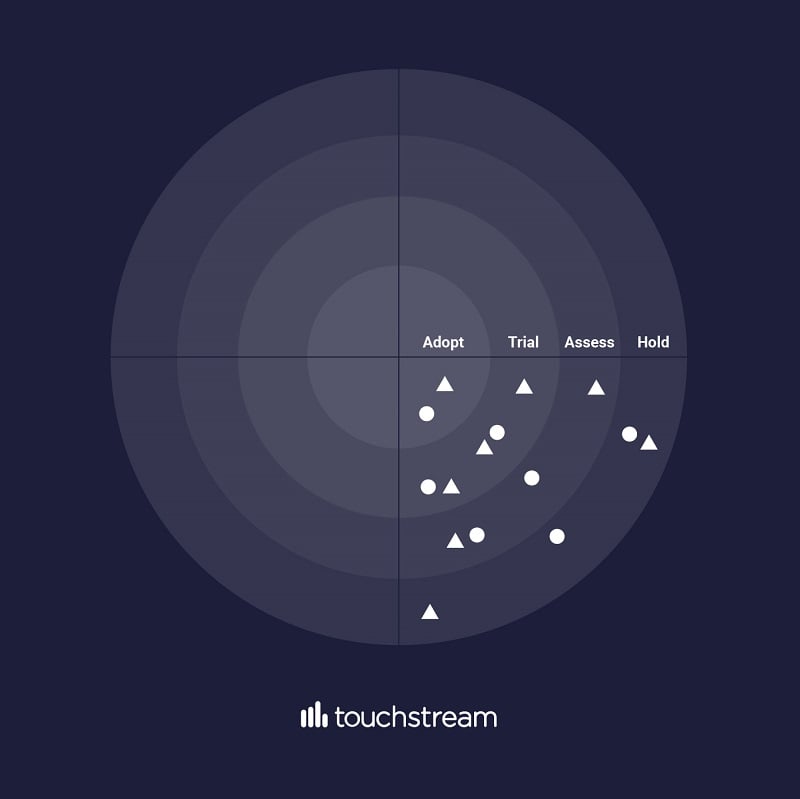

Even before you apply cloud adoption to your strategy or implement cloud technologies into your workflow, it helps to assess the landscape. The best way to do that is through a tech radar.

A tech radar can help you bucket technologies into categories so that your engineering teams aren’t wasting time figuring out which technology to look at. The radar will take into account the current state of the technology within the market and provide clear guidance across your entire organisation. Imagine a tech radar for video stream monitoring. An AI-based approach might be in the Assess Layer (because it’s still not totally proven and there’s a lot of iteration within the technology) while a microservice-based approach, such as Touchstream, might be in the Trial or even Adopt phase. You can also utilise Architecture Decision Records (ADRs) to capture and document decisions for technology selection so that development teams and even individual engineers understand why something was chosen.

The key to successful adoption and usage

Any streaming operator can adopt cloud technologies throughout their stack. They can also utilise cloud technologies within their development efforts to improve software scale and resiliency. However, to make those technologies part of the very DNA of your streaming development efforts, everyone needs to be on the same page. That really happens through the discussions and collaboration enabled by Tech Radars and ADRs. When everyone is moving in the same direction about developing within the cloud and with cloud technologies, you’ll find those adoption and usage scores improving–and the success of your platform doing the same.

2. Check if migrating a component actually makes sense

The migration of streaming video components to the cloud, and from one cloud technology (such as virtualisation) to another (like serverless functions), is a natural evolution of OTT streaming architectures, driven by the need for scalable and resilient services for a global user base. However, migrating technologies shouldn’t be taken lightly. Yes, the architecture needs to be able to grow efficiently and effectively based on audience demand, but it may not make sense for a component to be virtualised, turned into a microservice, or even made into a serverless edge function.

The first step is to determine the operational benefit of migrating the component. Will it have a meaningful impact on key metrics such as video startup times, rebuffer ratio, and bitrate changes? Also, will transitioning the component make it easier to support? If the answer is “yes” to both questions, then it makes sense to plan a path for migration.

3. Ensure you’ll still be able to monitor it

The second step, though, can complicate that path: determining how to monitor the new version. When the migration is from hardware to software, or from software to cloud, this can be a significant challenge as the transition could involve an entirely new approach (such as replacing hardware probes with software versions, which is a type of transition in and of itself). Of course, having a monitoring harness in place can make things much easier, as the new version can be programmatically connected to the harness, enabling operations to continue using existing dashboards and visualisations. In contrast, if no harness has been established, understanding the monitoring implications of the technology transition is critical to continuing that path of migration. Without a way to integrate the new version into existing monitoring systems, achieving observability becomes more difficult.
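The two steps above can be read as a simple checklist. The following is a minimal sketch of that reasoning, not a prescribed method: the `Component` fields and the three outcome labels are assumptions introduced for illustration.

```python
from dataclasses import dataclass


@dataclass
class Component:
    """One workflow component under review (all fields are illustrative)."""
    name: str
    improves_key_metrics: bool     # e.g. startup time, rebuffer ratio, bitrate changes
    easier_to_support: bool
    fits_monitoring_harness: bool  # can the new version plug into existing monitoring?


def migration_decision(c: Component) -> str:
    """Return a coarse recommendation for one workflow component."""
    # Step one: both operational questions must be answered with "yes".
    if not (c.improves_key_metrics and c.easier_to_support):
        return "keep as is"
    # Step two: the migrated version must remain observable.
    if not c.fits_monitoring_harness:
        return "plan monitoring first"
    return "plan migration"


encoder = Component("live encoder", True, True, False)
packager = Component("packager", True, True, True)
print(encoder.name, "->", migration_decision(encoder))
print(packager.name, "->", migration_decision(packager))
```

In practice each answer would come from measured data and team discussion rather than a boolean flag, but the ordering of the checks mirrors the text: operational benefit first, monitorability second.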

Once you’ve identified what workflow components to move to the cloud, the next step is to plan and determine how to execute the transition.

How to move your live streaming workflow to the cloud

A new approach to monitoring is needed

Moving streaming workflows to the cloud requires a different strategy for monitoring. In the past, almost all of the equipment for streaming was on-premises. Because of this, monitoring could make use of hardware probes installed in the same network. Although these probes provided solid data for streaming operators to examine stream performance, they were ultimately limited in three ways. First, they were hardware so they required maintenance and software updates. Second, they couldn’t monitor any resources outside the network, which was problematic as streaming workflow technologies were being virtualised. Third, they couldn’t monitor third-party resources like content delivery networks, which were becoming increasingly important to deliver a great streaming experience.

As a result, a new approach to monitoring is needed, one based on two core pillars: data acquisition and data visualisation. In that first pillar, it is critical for streaming providers to employ technology that captures data from on-premise equipment, virtualised technology in the cloud, and third-party providers. However, that data is useless if it can’t be correlated together.

Moreover, that can’t happen if the monitoring of those three areas is handled by different monitoring technologies which provide no means of integration. That’s why data visualisation is the second pillar. Just as the approach to acquiring the data must change to accommodate these different groups of technologies within the hybrid streaming workflow, a data visualisation strategy must address how to connect the data together into a single view. This can really only be done through a monitoring approach built on API data consumption.
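To make the two pillars concrete, here is a minimal sketch of acquiring data from the three source types and correlating it into a single view. The payload shapes, field names, and the `news-1` stream are hypothetical; real encoder, cloud, and CDN APIs will return different structures.

```python
from collections import defaultdict

# Hypothetical payloads, one per source type; real APIs will differ.
ON_PREM = [{"channel": "news-1", "encoder_fps": 49.8}]
CLOUD = [{"streamId": "news-1", "transcode_latency_ms": 420}]
CDN = [{"stream": "news-1", "cache_hit_ratio": 0.93}]

# Which key holds the stream identifier in each source's payload (assumed).
ID_KEYS = {"on_prem": "channel", "cloud": "streamId", "cdn": "stream"}


def normalise(source, records):
    """Flatten source-specific records into (stream_id, source, metric, value) rows."""
    id_key = ID_KEYS[source]
    rows = []
    for record in records:
        stream_id = record[id_key]
        for metric, value in record.items():
            if metric != id_key:
                rows.append((stream_id, source, metric, value))
    return rows


def single_view(rows):
    """Group normalised rows by stream so one lookup shows the whole workflow."""
    view = defaultdict(dict)
    for stream_id, source, metric, value in rows:
        view[stream_id][f"{source}.{metric}"] = value
    return dict(view)


rows = normalise("on_prem", ON_PREM) + normalise("cloud", CLOUD) + normalise("cdn", CDN)
view = single_view(rows)
print(view["news-1"])
```

The point of the sketch is the shared key: once every source is reduced to the same row shape and the same stream identifier, the data can be correlated regardless of where it was collected.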

Moving monitoring to the cloud

The natural place for this new approach to monitoring is the cloud. Kept behind the corporate firewall, a monitoring system built on API data consumption can become a security risk and create accessibility issues when people are out of the physical office. By contrast, a monitoring solution in the cloud (a dashboard on top of a data repository that can easily connect to any API) allows anyone to access critical operational data about any component within the streaming workflow. Furthermore, it’s inherently flexible. Rather than relying on hardware-based probes, monitoring in the cloud (as part of a MaaS solution) can employ software agents that can be easily integrated with virtualised workflow components. This makes the entire monitoring architecture extremely flexible for data acquisition, especially with components and third parties that support that API integration.

Because the agents are software- and cloud-based (encapsulated as a microservice, for example), they are extremely scalable. If your workflow capacity expands at some point, say needing more caches, the agents can expand as well to ensure that there is no single point of failure for acquiring all the data. This, of course, can’t happen with physical hardware probes which may tip over when flooded with data. What’s nice about this approach, though, is that these same microservice agent containers can be deployed internally as well. Yes, you’ll require some hardware behind the firewall to install them–such as a pair of redundant NGINX servers–but once deployed, they could easily collect data from on-premise equipment that supports API integration and relay it back to the cloud-monitoring platform.

With all the data going back to a cloud-based data lake, it’s easy to connect a cloud-based visualisation tool (like Datadog or Tableau) that allows for significant customisation. You can build whatever dashboard you want, normalise the data in a way that best fits your operations and business needs, and provide access to anyone. That last benefit is key because people are no longer tied to being in the building to see the data–operations people can be anywhere, using any device, to do root-cause analysis.

As you can probably gather by now, a cloud-based monitoring approach, using containerised, microservice agents deployable anywhere with API integration, is highly flexible and scalable. Instead of being tied to a specific platform or operating system, the agents can be deployed in any environment that supports containers, which allows for inherent elasticity as well. Agents don’t tip over because they are overwhelmed. Business logic attached to the container simply spins up another microservice instance to handle the new demand. This kind of flexibility is critical in an environment such as streaming where traffic can fluctuate wildly.
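The scaling logic described above could be as simple as the following sketch. The per-agent capacity figure and the minimum of two agents (to avoid a single point of failure) are assumptions for illustration; a real deployment would typically delegate this to the container orchestrator's autoscaler.

```python
import math


def agents_needed(events_per_second: float, capacity_per_agent: float, minimum: int = 2) -> int:
    """Return how many agent instances should run for the current load.

    `capacity_per_agent` is a hypothetical number of events one agent can
    handle per second; `minimum` keeps at least two agents running so that
    no single agent is a point of failure.
    """
    if capacity_per_agent <= 0:
        raise ValueError("capacity_per_agent must be positive")
    return max(minimum, math.ceil(events_per_second / capacity_per_agent))


# Quiet period, then a traffic spike during a live event.
print(agents_needed(500, 1000))
print(agents_needed(4500, 1000))
```

Rounding up rather than down means the agents always have headroom, which matches the goal that agents never tip over when flooded with data.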

It’s important that this kind of monitoring approach, employing agents across cloud, on-premise, and third-party, takes into account best practices. A lot of work has already been done in organisations, like the Streaming Video Alliance, to identify and document considerations that must be addressed, such as an end-to-end monitoring solution.

If you want to find out more about how Touchstream performs these tasks, watch our latest video.

Planning is critical

Monitoring can be an open platform. It doesn’t have to exist behind the corporate firewall, tied to screens hanging on a NOC wall. It can leverage the cloud, employ distributed microservice agents to integrate with data sources through the workflow, and provide visualisation consumable on any device, anywhere. However, to make this happen, planning must be a priority. You need to think about which of your workflow technologies can be virtualised, which can be fulfilled by third parties, and how you will get the data from each of those. Once you have that list, you can then assess it against your current approach to monitoring and plan for a clear transition to a cloud-based strategy. In addition, it’s important to select a visualisation tool that will support your long-term needs. Can it normalise the data in the way you need? Is it customisable enough to meet your operations and business needs? Does it integrate with the datasets you want to use (we use Datadog at Touchstream because they have plugins for CDNs and Elemental encoders)? Doing this kind of planning will ensure continuity: even as you expand your monitoring efforts, you can continue to see the important data.

It’s important to remember too that the ultimate objective when planning and implementing a cloud-based monitoring approach is being able to identify the root cause of streaming issues. With agents deployed across your cloud and on-premise infrastructure, gathering data from all workflow components, it’s much easier to view granular details even down to the individual video session. Moreover, with a customised dashboard, KPI thresholds that have been exceeded can be seen, and acted upon, via a smartphone.
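As a rough illustration of session-level KPI thresholds, the sketch below flags the metrics a single session has exceeded. The metric names and limit values are hypothetical and would need to be replaced with your own service targets.

```python
# Hypothetical upper limits; tune them to your own service targets.
THRESHOLDS = {"startup_time_s": 3.0, "rebuffer_ratio": 0.01}


def breached_kpis(session: dict) -> list:
    """Return the names of KPIs whose value exceeds its threshold."""
    return sorted(
        name for name, limit in THRESHOLDS.items()
        if session.get(name, 0.0) > limit
    )


session = {"session_id": "abc123", "startup_time_s": 4.2, "rebuffer_ratio": 0.004}
alerts = breached_kpis(session)
if alerts:
    print(f"session {session['session_id']} breached: {', '.join(alerts)}")
```

A dashboard or alerting tool would normally own this logic; the sketch only shows how little is needed once per-session data is available in one place.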

The reality of parallel workflows

There are many considerations to make when transitioning to a new version of workflow technology. Operationalisation is critical: can it be monitored, managed with existing CI/CD pipelines, and so on? There’s also the business to consider. The streaming platform, whether just out of the gate or well established, has paying subscribers. There are viewers with expectations of consistency, quality of experience, and reliability. This means that an operator can’t simply cut over from on-premise equipment or software to a cloud-based version or replace one cloud-based version with a different one. The two versions must operate side-by-side so the monitoring infrastructure, built to handle the previous version, can be adapted and modified. There shouldn’t be a complete cut-over until it’s been established that the new, cloud-based versions can support existing viewership.

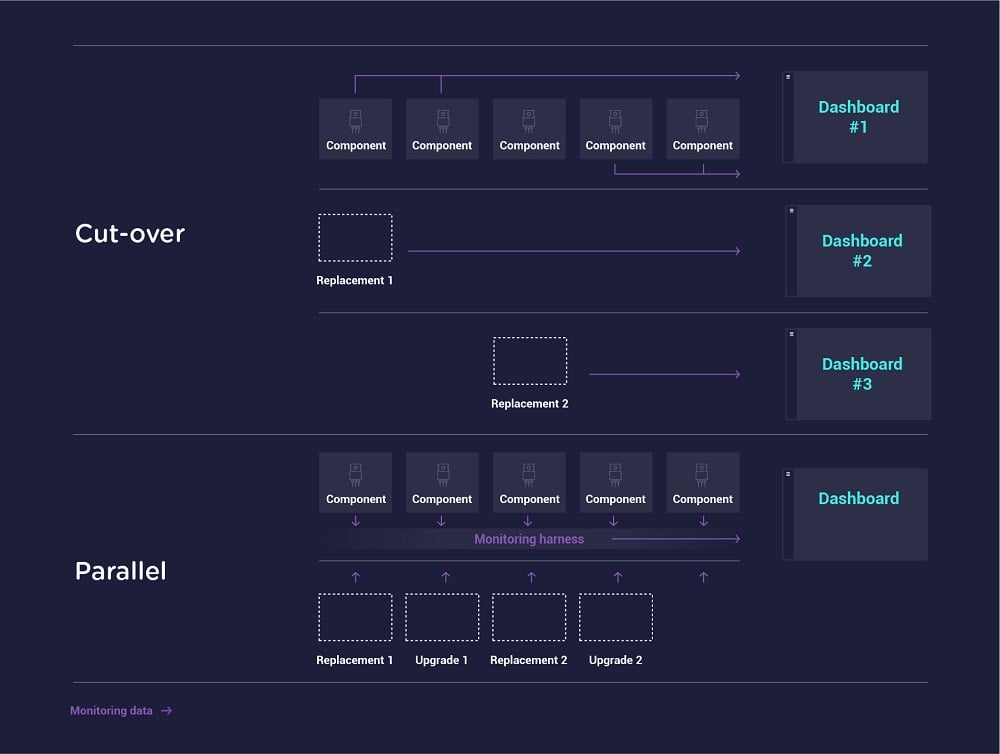

Overview of two different streaming video workflow strategies: cut-over vs. parallel
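One way to decide when a parallel run has proven the new version is to compare the same metrics from both versions. This is a minimal sketch under stated assumptions: the metric names are hypothetical, every metric is treated as "lower is better", and the 5% tolerance is an arbitrary example value.

```python
def ready_to_cut_over(old: dict, new: dict, tolerance: float = 0.05) -> bool:
    """True if the new version is within `tolerance` of the old on every metric.

    All metrics are assumed to be "lower is better". A metric missing from
    the new version counts as a failure, since it cannot yet be monitored.
    """
    return all(
        new.get(metric, float("inf")) <= old_value * (1 + tolerance)
        for metric, old_value in old.items()
    )


on_prem = {"startup_time_s": 2.0, "rebuffer_ratio": 0.010}
cloud_ok = {"startup_time_s": 2.05, "rebuffer_ratio": 0.009}
cloud_slow = {"startup_time_s": 2.5, "rebuffer_ratio": 0.009}

print(ready_to_cut_over(on_prem, cloud_ok))
print(ready_to_cut_over(on_prem, cloud_slow))
```

Treating a missing metric as a failure reflects the point made above: a version that cannot be monitored has not yet been shown to support existing viewership.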

The old is new again

As hardware becomes virtualised and software moves from servers to cloud-based instances, operators might be inclined to decommission the old equipment or the previous version of the component. However, there are benefits to keeping it around.

The cloud, although highly distributed, isn’t disaster-proof. Even though your video technology stack components are now scalable and elastic, the cloud can still go down. There have been documented instances of even large cloud providers, like Amazon, going offline for a couple of hours.

Remember those encoding boxes, stacked to the ceiling? Rather than being dismantled, they can be used as a critical part of a redundancy strategy. If there does happen to be a cloud failure, then requests can fall back to the older hardware-based components within your data centres. This could include purpose-built boxes, like encoders, or more commodity boxes, such as caches. Yes, viewers may experience a bit more lag (although employing commercial CDNs as part of delivery can help mitigate that) and it will cost you extra to have both systems running simultaneously, but at least the content will continue to flow until cloud resources can be brought back online in the event of an outage. You can even deliver a small percentage of production traffic out of the redundant system (keeping it “warm”) to regional subscribers so there are no latency issues with request response times.
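The fallback and "warm" traffic ideas can be sketched as a small routing function. This is an illustration only: the text suggests selecting warm traffic by region, whereas this sketch simplifies that to a stable percentage of sessions, and the 5% default, the origin labels, and the health flag are all assumptions.

```python
import hashlib


def bucket(session_id: str) -> int:
    """Map a session ID to a stable bucket in the range 0-99."""
    digest = hashlib.sha256(session_id.encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def choose_origin(session_id: str, cloud_healthy: bool, warm_percent: int = 5) -> str:
    """Pick which system serves a session.

    When the cloud is unhealthy, everything falls back to on-premise
    equipment. Otherwise a small, stable share of sessions is still sent
    on-premise so the redundant system stays warm.
    """
    if not cloud_healthy:
        return "on_prem"
    return "on_prem" if bucket(session_id) < warm_percent else "cloud"


print(choose_origin("viewer-42", cloud_healthy=False))
print(choose_origin("viewer-42", cloud_healthy=True, warm_percent=0))
```

Hashing the session ID keeps each viewer on the same system for the life of the session, which avoids flipping a stream between origins mid-playback.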

Embracing transition

Being in a constant state of transition, moving from one version of streaming technology to another, is just part of the reality now. However, it’s more than just migrating protocols or codecs. It’s also about virtualisation and serverless functionality. It’s about embracing the cloud, the edge, the mist and the fog. Transition within streaming workflows is about the very nature of the technology. Building and providing a future-proof service means accepting that video technology stack components are not only going to change in functionality but also in their very nature. Moreover, when you decide to migrate from one instantiation, like hardware, to another, like the cloud, you can’t just cut and run. The existing technologies must remain running until the newer version has been proven and can be integrated into existing monitoring frameworks (such as a monitoring harness). When that’s done, the old versions can become part of a redundancy strategy so that viewers are never left without the content they pay for.

The cloud is becoming an intrinsic part of the streaming technology stack. There is no reason that it shouldn’t become integral to monitoring as well.

It’s time to embrace cloud monitoring. Are you ready?

It’s clear that times have changed with respect to how we can monitor streaming workflow components. Not only can we get data about both physical and virtualised elements, but, by leveraging the cloud, we can even get data from third-party providers such as CDNs. Furthermore, if you don’t want to build it yourself, Touchstream has a solution called VirtualNOC that can get your network operations engineers the data they need to take quick action against problems that cause subscriber loss. With powerful features like a data rewind, allowing you to trace a session all the way back through the workflow to identify where it failed, you’ll have more control over your streaming monitoring than ever.

Moving your streaming workflow to the cloud is a necessity, but don’t take it lightly

Transitioning streaming workflows to the cloud has emerged as an effective solution to handling the rapid rise of streaming viewers. Yet, it requires careful analysis and planning to develop a migration strategy that is customised to your needs and ensures the most important thing: consistent, high QoE at all times. This makes live stream monitoring paramount to your success.

To find out how to scale your monitoring operations with Touchstream’s VirtualNOC, download our Monitoring Harness White Paper now.